Scaling, modularity and the question of blockchain longevity

An attempt at deciphering the future of Ethereum through digging deep into zkEVMs, Modular Layers, Middleware, L3s and more

* DISCLAIMER: None of this is financial advice, I was not financially incentivized to write any of this and I do not possess investments in anything mentioned aside from ETH. What you are about to read is purely a hobby of mine, an act of my free time that could’ve otherwise been spent playing Overwatch 2. This report goes well beyond the email limit, so if you’re reading through an email browser you will have to visit my blog directly to read the whole thing. Trust me - it’s well worth it. You can skip the first section if you want to avoid the recap and lame story I begin with, but it helps shape the narrative and I think it’s fun. I’d like to give a special shoutout to @brianfakhoury and @0xCoinjoin for taking the time to proofread and share some much needed feedback, I really appreciate it. *

A review of past events

There’s a really tall building under construction near where I live. At night I like to look at it because each floor is lit up by hundreds of fluorescent light bulbs, only visible because they haven’t built the walls yet. It looks like an industrial Christmas tree, a monolith of an era that humanity might be leaving as we take the first cautious steps into what’s crudely defined as the metaverse.

In the late 1800s, the first skyscraper was built. At that point in the United States’ history, there were only about 50 million citizens, a far cry from the 330 million or so that were recorded in 2021. What’s changed since then? Society is torn between the digital and the physical - we might spend the majority of our daily life staring at a screen, but find ourselves tethered to the physical world we’ve always known. The pandemic opened the eyes of an entire planet to what a digital life might resemble down the line. Remote work, incessant Zoom calls, relative sovereignty and online grocery delivery. Yuck.

In the future we’ll probably look back on this time period and laugh, just like we look at the early versions of computers or the internet and wonder how we used to function with these prehistoric relics. You can say that life has returned to a level of normalcy since most of the world cut the leash on pandemic restrictions, but there’s something a little off about it all. Elon Musk is running Twitter, Mark Zuckerberg is creating a dystopia (that might be a massive failure) and Jeff Bezos is whacked out of his mind on whatever steroids $100 billion can get you - what’s going on?

Like I said earlier, we’re in a weird middle period straddling full technocracy powered metaverse globalization and the pre-internet world characterized by dull, monotonous and fragmented physical life. Even cities don’t feel the same anymore, unless you’re young and somewhere heavily populated with a lot of culture (see: Miami, New York City, Chicago and so on). It feels like we keep doing the things we do out of habit, rather than enjoyment. Sure, it’s nice to go out and see friends or hang with a coworker in the office on occasion, but most of what really concerns us is online. Where do we go from here?

Just like when the first skyscraper was built - a 10 story building that looked out of place in 19th century Chicago - our digital counterparts (selves, personalities, devices) feel out of place as they’ve slowly mutated in our daily lives.

If you’ve ever stepped outside, you’ll know that these new structures weren’t uncommon for very long - it’s almost impossible to enter a city without seeing dozens or hundreds of skyscrapers. They became the new normal. While things might look or feel weird now, it’s likely that technology integrates and becomes more natural to our way of being, just as it has for the entire history of humanity.

To use a tired analogy, if you went back in time 100 years and showed someone a smartphone more powerful than any technology available, they’d faint. The same individual would collapse and stop breathing if you were to tell them almost every person on the planet has one of these, but the concept doesn’t really strike us as anything extraordinary.

Maybe in 100 years someone from the metaverse will time travel and explain to us that spending 20 hours a day with virtual reality goggles strapped on isn’t really that odd and that no, of course it isn’t weird to witness your first born child’s birth with virtual reality goggles on - how else could you be expected to meet your daily advertisement viewing quota for Zuck?

I’m trying to make a long story short, but the point is this: just as skyscrapers became ubiquitous in urban living, so will technology of all forms, whether we’re discussing the internet, cryptocurrency or artificial intelligence. And just as buildings evolved from monolithic structures confined to one purpose (housing, single business, manufacturing) into the modular hyperstructures of the urban revolution (multiple businesses in one skyscraper, luxury living scaled vertically), so will our precious blockchain Ethereum.

Do you remember how we got to this point?

In crypto’s most recent cycle, we saw a ridiculous number of Alternative Layer 1 “Ethereum Killers” pop up, attracting tens of billions of dollars in TVL and inciting thousands of Twitter threads from VCs describing them as the messiah sent to deliver us from Ethereum’s sins. This was fine for a while: the alt L1 tokens went up a lot, the ecosystems boomed, opportunities were created and new crypto users were able to affordably transact in these environments. Then the music stopped.

Looking at the DeFi TVL of these alt L1 ecosystems in the present day, we can see these Ethereum Killers have taken on the role of pacifists, complacently idling by as development amongst Layer 2 and Modularity-based teams continues at a rapid rate. To play devil’s advocate here, I’ll say that alt L1s aren’t inherently bad - many of them have innovative designs that could lead to dominance of a crypto niche down the line. The issue is that they don’t have enough of a draw to pull users away from Ethereum and its dominant market share of DeFi TVL.

Looking at these alt L1s, we can immediately see they’re fighting a cultural battle from their centralized validator sets alone. In Ethereum’s case, there are over 420,000 validators powering one of the greatest experiments in distributed systems of all time. No alt L1 comes close, with Solana taking the second place spot with a whopping 3,400 validators.

This might not sound like a huge problem right now, but one of the core tenets of crypto is decentralization - there’s a reason Ethereum was created with a focus on security and decentralization, a design choice that is desperately needed in anything that markets itself as a better alternative to the current king of the blockchains.

Looking past the validator sets of alt L1s, another reason they’ve failed is fairly obvious in hindsight - the money printer turned off. In easy times (early 2020-early 2022), any inexperienced crypto investor or trader could throw money at a coin and be fairly confident it would pull at least a 5x in a reasonable timeframe. This fueled hysteria, extremely overoptimistic mindsets and above all, greed. I’m not saying these were unjust pumps of alt L1s and their ecosystem tokens, but they stand today as a graveyard of a bygone era. Just take a look at the APT (Aptos) chart if you want to know how launching an alt L1 will go down in the current crypto environment.

These alt L1 TVLs are down a ridiculous amount, even when accounting for drawdowns in native token deposits and not just the ludicrous -85% charts when denominated in USD. Looking further at these alt L1 ecosystems, the top 10 protocols are more often than not a hybrid of 1 or 2 successful native apps, a cross-chain implementation of a Uniswap / Aave type (Started on Ethereum) application or some kind of “shell of its former self” app that suits no purpose but still holds a decent chunk of user TVL for some reason unbeknownst to me. It’s my belief that from here on out, somewhere around 90% of developers will actively build on a Layer 2 or Ethereum, and why shouldn’t they? Just look at how Arbitrum and Optimism have performed in recent months relative to the Ethereum Killers.

These L2s have even managed to build communities of passionate users, one of the core reasons why alt L1s were able to do so well. Without the disciples, there can be no evangelization. It isn’t the greatest or most quantitative example of growing L2 dominance, but it serves as a stark contrast to what’s been playing out (and what will continue playing out) across alt L1s.

This isn’t to say they haven’t been killing it in a variety of other ways, as evident through Polygon and Solana’s next level business development and serious partnerships. I’ll touch on Polygon a bit more in the next section, particularly focusing on their zkEVM and how it might compete with some of the numerous other zkEVMs.

But enough of the background, it’s time to focus on where we are today and what’s to come. As it stands, Ethereum has completed the highly anticipated Merge to PoS, with a series of upgrades planned for years to come (the Surge, Verge, Purge and Splurge). Here’s a good infographic of what these upgrades entail (Vitalik tweet link).

Later on in this report I’ll touch on some of these terms, particularly sharding and EIP-4844, a small step towards Ethereum’s endgame. Looking at this next chart, Post-Merge Ethereum is the only L1 that’s able to produce a positive profit margin - it isn’t even a competition (Except for Binance Smart Chain, but I’ll let @0xgodking handle that cesspool).

Even more promising, development around zkEVMs, modular solutions, middleware and even L3s has been taking Twitter by storm in the past month. It is my belief that Ethereum will come out on top as the superior and #1 blockchain that will possess the lion’s share of DAUs and TVL for every kind of crypto application that has come and that’s yet to come. The purpose of this report is to explain why I feel the way I do, how these promising pieces of technology work and what this means for the average crypto user as they navigate these next few years.

Looking into the tech

Here we are.

Let’s say you’re a fairly knowledgeable crypto user that spends your time browsing Crypto Twitter. You follow Inversebrah, Cobie and Hentaiavenger66 and consider yourself fairly adept at navigating the trials and tribulations that come with a nascent crypto market. Maybe you made a lot of money in the bull run and are sitting cozy or somewhat less cozy than you were earlier this year, but you’re chilling. In the bull run, you didn’t have to know a lot to be successful. Between the GMs, positive vibes and brinks truck of dough you were making for clicking buttons, there wasn’t much time to research the mechanisms that paid for your Lamborghini. These days, there isn’t as much for you to do on Twitter.

Maybe you’re working for a DAO or a new protocol that’s launching soon, trying to pull in new users from Twitter who just want to reclaim their lost gains. Maybe you’ve tried your hand at researching stuff like MEV, only to find you aren’t nearly as smart as the market made you think. You probably have a decent understanding of what an optimistic or zero-knowledge rollup is and why they’re necessary. Hell, maybe you got a fat bag of OP and passed on your governance tokens to a more upstanding citizen of the blockchain. Regardless, you are slowly realizing you don’t have an edge.

People are constantly tweeting about zkEVMs and why they’re the future as you nod along and like all their tweets. In the back of your mind, I know you’re asking yourself why a zkEVM needs to exist in the first place and why there should be more than maybe two of them. And I’m almost positive you’ve probably wondered why there are zkEVMs if we already have optimistic rollups that are doing good - Ethereum doesn’t even have a shot at reaching mass adoption for like five years, so it’s unclear why developers are thinking so far ahead.

Maybe you see people tweeting about concepts that don’t make any logical sense. You ask yourself: “What the hell is data availability? Why are we worried about this all of a sudden, doesn’t the problem lie in Ethereum’s low TPS? L2s handle execution and solved that issue, why are we worried about something that isn’t even a problem? And if L2s are so damn good, why do we even need modular layers? If Ethereum has these huge plans to implement sharding, what’s the point of something like Celestia if it’ll just get phased out in a few years? And why in the fuck are L2 devs tweeting about Validiums, Volitions and L3s if we haven’t even onboarded a large amount of users to the same L2s that have solved all our problems?”

Believe me, the current state of Twitter is less than ideal for someone who has limited time and patience to dig through podcasts, medium articles, documentation and hundreds of Twitter threads just to get some kind of an answer to these questions. I’m not saying it’s impossible to understand everything, but there aren’t many (if any) resources out there that have pooled all of this information together to create an understandable presentation of it.

Until now.

The truth is, I’m not an expert on any of this. L2s, L3s, zkEVMs, modular layers and complicated middleware are all way out of my comfort zone. But what fun is there in being complacent, especially when the stakes are so high? Over the past few weeks, I’ve been taking a lot of notes, doing more reading than I ever have before and preparing myself to try and summarize the future of Ethereum as it becomes a modular powerhouse. It hasn’t been easy.

I’m fairly confident I will get a lot of things wrong in this report, but I don’t mind. I am not claiming to be an expert on scalability, cryptography, complex mathematics or even a master of the blockchain - I am just a hungry learner who wants to help others gain an understanding of how a modular Ethereum might change everything. I urge you to comment on this post or reply to my tweets with as many critiques as you can find. Through summarizing difficult to immediately digest information in a format that’s fit for the average crypto user (like myself), I think the entire space can benefit by leveling the playing field.

I wrote this report with a goal of making it the most technical one yet, but forgive me if I don’t dig as deep as you want. My main purpose in writing this was to make sense of all these moving parts and how they’ll come to work together down the line. I’ve done a lot of reading, revising, scouring, note taking and painstakingly arrived at what you are about to read.

Grab two cups of coffee, we’re about to kick things off.

ZK Rollups and zkEVMs

We all know what the EVM is, the all-powerful environment that powers the machine and executes our shitcoin-filled transactions. But what about a zkEVM? Put simply, a zkEVM uses zero-knowledge proofs such as ZK-SNARKs to validate Ethereum transactions, with a goal of making a zero-knowledge rollup (ZKR) to handle execution. I’ve talked about zero-knowledge proofs in the past so I’ll avoid rehashing the specifics today. In short, a zero-knowledge proof is an exchange between two parties (a prover and a verifier) where a statement must be proven without the underlying information being revealed.
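To make the prover / verifier exchange a bit more concrete, here is a minimal sketch of a classic interactive proof of knowledge (a Schnorr-style identification round). To be clear, this is not a ZK-SNARK and not what any zkEVM actually runs - it’s just the smallest example I know of where a prover convinces a verifier that they know a secret without sending it. The group parameters are toy values I picked for readability and are far too small to be secure, and every function name here is hypothetical.

```python
import secrets

# Toy parameters (assumption, NOT secure): G has prime order Q modulo P.
P, Q, G = 23, 11, 2

def keygen():
    """Prover picks a secret x and publishes y = G^x mod P."""
    x = secrets.randbelow(Q - 1) + 1  # secret in 1..Q-1
    return x, pow(G, x, P)

def commit():
    """Prover picks a random nonce r and sends the commitment t = G^r mod P."""
    r = secrets.randbelow(Q)
    return r, pow(G, r, P)

def respond(x, r, c):
    """Prover answers the verifier's challenge c without revealing x."""
    return (r + c * x) % Q

def verify(y, t, c, s):
    """Verifier checks G^s == t * y^c (mod P) using only public values."""
    return pow(G, s, P) == (t * pow(y, c, P)) % P

def run_round(x, y):
    """One full commit / challenge / response round between both parties."""
    r, t = commit()
    c = secrets.randbelow(Q)  # verifier's random challenge
    s = respond(x, r, c)
    return verify(y, t, c, s)
```

The verifier only ever sees `y`, `t`, `c` and `s`, never the secret `x`. A prover who doesn’t know `x` can only pass a round by guessing the challenge ahead of time, which is why rounds get repeated (or, in real systems, why the parameters are astronomically larger).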

Zero-knowledge proofs are superior to the fraud proofs utilized by Optimistic rollups (ORs) because they are small, cheap to verify and prove each batch correct up front. Fraud proofs require “watchers” to keep an eye out for malicious behavior during a challenge period, while zero-knowledge proofs only rely on magical mathematics, cryptography and maybe development teams cracked out on Red Bull to deliver faster execution and cheaper transactions.
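Here’s a rough sketch of what that difference means for when a batch of transactions can be treated as final. It’s a toy model under my own assumptions, not any rollup’s actual contract logic: the seven-day challenge window is an assumed value, and the `Batch` fields and function names are made up for illustration.

```python
from dataclasses import dataclass

# Assumed challenge window for the optimistic case: seven days, in seconds.
CHALLENGE_WINDOW = 7 * 24 * 3600

@dataclass
class Batch:
    posted_at: int                  # timestamp when the batch landed on L1
    has_valid_proof: bool = False   # ZKR: a validity proof was verified on L1
    challenged: bool = False        # OR: a watcher submitted a successful fraud proof

def optimistic_final(batch: Batch, now: int) -> bool:
    """An OR batch is final only if nobody proved fraud before the window closed."""
    return (not batch.challenged) and (now - batch.posted_at >= CHALLENGE_WINDOW)

def zk_final(batch: Batch) -> bool:
    """A ZKR batch is final as soon as its validity proof checks out."""
    return batch.has_valid_proof
```

The optimistic path depends on time passing and on at least one honest watcher paying attention, while the ZK path depends only on the proof verifying.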

As discussed by Alex Connolly in this excellent report, the development of zkEVMs has less to do with EVM-compatibility than it does with Ethereum’s unique tools. Some of these include Solidity (programming language) and Ethereum’s token standards (ERC20 and ERC721). Connolly cites Polygon as an example of using Ethereum’s tooling to its advantage, without the hassle of developing from the ground up like Solana or Near.

The EVM is the most widely used runtime environment, so why reinvent the wheel? Optimism is a great example of this, as they had been working on their own runtime environment - the Optimism Virtual Machine (OVM) - and having issues creating a transpiler to move from the OVM → EVM. I’m not an expert, so here’s a great thread from @jinglejamOP that tells the story.

In a Mirror post, Optimism said it themselves: “We’ve learned a lot about how scalability relates to the developer experience—namely, by getting out of the way and letting Ethereum do its thing.” Through the lessons they learned, Optimism announced a future upgrade to deliver Cannon, an EVM-Equivalent fault proof. But enough of ORs, back to the weird shit.

At this current point in time, there are four types of zkEVMs described by none other than our three-legged friend Vitalik in this excellent post.

These include Ethereum-Equivalent, EVM-Equivalent, EVM-Compatible and Solidity-Compatible - but what’s the difference between these and what tradeoffs are made? Starting with Ethereum-Equivalent zkEVMs (known as Type 1 zkEVMs), these are practically identical to Ethereum in almost everything. They aren’t entirely practical yet, as teams are still working on crossing the chasm between Type 3 and Type 2 zkEVMs, but nonetheless Type 1 zkEVMs are pretty cool in theory and might one day come to fruition. The main downside of a Type 1 zkEVM is the longer time required to generate proofs for its blocks.

Moving on, we have EVM-Equivalent zkEVM (Type 2), the holy grail of zkEVM development. A Type 2 zkEVM can operate faster than a Type 1 by abstracting away the processes of Ethereum that are unnecessary for their goal. Scroll is an example of a Type 2 zkEVM, as it follows the methods of Ethereum 1:1, the only difference being the runtime environment (zkEVM v. EVM). How does Scroll accomplish this?

According to this post from the team, Scroll consists of 3 distinct parts: the Scroll Node, Roller Network and Rollup / Bridge Contracts. As I mentioned, Scroll differs from Ethereum in its processing of opcodes (instructions through code for various operations). To break through this brick wall, Scroll is “building application-specific circuit (“ASIC”) for different DApps” to connect with their zkEVM runtime environment. It’s a tall task, but I believe they’re up to the challenge.

Another Type 2 zkEVM is being developed by Polygon, which recently announced its testnet launch. While similar to Scroll’s architecture, the Polygon zkEVM adds an extra step by sending EVM opcodes through their zkProver, stating: “EVM Bytecodes are interpreted using a new zero-knowledge Assembly language (or zkASM).”

Where Scroll works to process EVM opcodes without an extra layer, the Polygon zkEVM adds an extra step to make their life a little bit simpler, maybe reducing compatibility a little bit in the process. Both teams are hard at work and I’m anxious to see how they perform once fully launched.

Type 2 and Type 3 zkEVMs (EVM-Equivalent and EVM-Compatible) are fairly ambiguous in identification right now, mainly because different individuals might debate on the categorization of Scroll and the Polygon zkEVM - shit is just too complicated and the categorization system isn’t entirely set in stone. Because of this, I’ll skip directly to zkSync and Starkware, two examples of the highly performant Solidity-Compatible (Type 4) zkEVMs.

Type 4 zkEVMs are fairly different in structure from Ethereum, but offer the highest performance in exchange for reduced compatibility. As you might know, zkSync is preparing for its full alpha launch (maybe with a token) and Starknet mainnet is live with constraints set on ETH deposits (a mini alpha of sorts).

While Starknet doesn’t natively support Solidity (yet), they are able to get around this with some help from the Nethermind Transpiler. Solidity code is transpiled into Cairo, a programming language StarkWare created, and the transpiled code then runs on the Cairo VM. Solidity is the most popular smart contract development language, giving Starknet the benefit of smoother developer onboarding and the increased performance specific to a Type 4 zkEVM.

One issue Alex Connolly cites with Starknet is the fact that most programs currently available have been written in Cairo - potentially raising concerns for future developers that write in Solidity. While this is more of a “we’ll cross that bridge when we get there” problem, it’s worth noting and keeping an eye on.

Looking at zkSync, their process is fairly similar to Starknet’s with a few key differences. Like Starknet, zkSync compiles Solidity source code instead of executing EVM bytecode directly: Solidity is converted into Yul, which gets sent to their LLVM compiler and eventually makes its way to their custom virtual machine, the SyncVM - or is it the ZincVM? Either way, these processes are far from 1:1 compatible with Ethereum, but speed is key in this world of 0s and 1s.

A shared factor of Type 4 zkEVMs is their “zk-ification” of the EVM. With zkSync and Starknet, they don’t stress over the complicated process of fine-tuning the EVM to make it work with zero-knowledge proofs. By making their own zkEVM, they can trade the sweat and tears of technical complications in exchange for faster performance with a little more elbow work in developing their own workarounds.

While time will tell what type of zkEVM comes out on top, the performance of technologically inferior ORs gives a fairly strong indicator that ZKRs will do very well for themselves. All that’s left to do now is wait.

Validiums and Volitions

This part is a bit more complicated, but I promise it will make sense afterwards. Remember when I mentioned L3s? Allow me to introduce you to Validiums and Volitions, the next step for ZKRs like Starknet and zkSync.

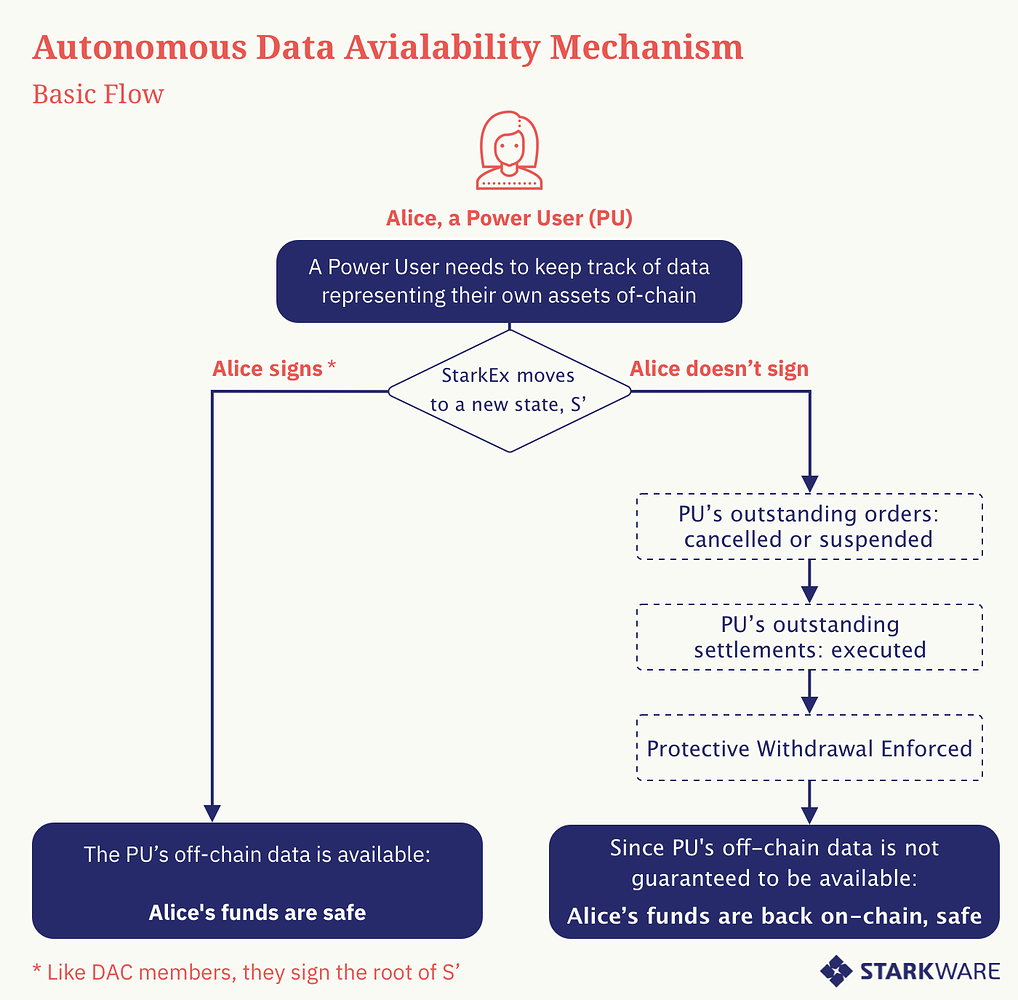

A validium is essentially a ZKR with one key difference - data availability is handled off-chain, for example by a data availability committee (DAC), rather than on-chain. But why are validiums necessary and how do they fit in with an L2? For starters, here’s a graphic created by StarkWare that outlines a potential future for Starknet.

As you can see, Starknet (the ZKR) sits as an L2 connecting to L3 nodes - StarkEx is an example of a validium, an L3 that generates STARK proofs off-chain (with proposed TPS between 12,000 - 500,000 dependent on type) that can then be sent to Starknet to receive verification. A validium is an application-specific rollup, which you might recognize if you’re familiar with Immutable X or dYdX. Why is this so cool? The validium is able to provide just a single verification, making transactions cheaper, faster and seriously upping the TPS of a standard ZKR. Here’s one of my favorite graphics that shows the potential for L3s - it actually inspired me a while back to look into what an L2 was.

As you can see, ZKRs are up to 4x faster than ORs, while something like zkSync’s proposed ‘zkPorter’ can be up to 10x faster than even a ZKR. zkPorter is Matter Labs’ proposed L3 for zkSync, and it operates much like StarkEx by keeping data off-chain; paired with zkSync’s rollup mode, it works as a volition. The only downsides of an L3 are the decreased security they provide and the lack of shared liquidity - but they’re still more secure than an alt L1. How do they do it?

Ethereum’s L2s share security with mainnet Ethereum, but an L3 sits one layer further away from this L1 and is a little bit detached from it. Because the data behind funds in a validium is held off-chain and data availability gets abstracted away from the ZKR, a user’s funds are essentially at the whim of whoever is working as an operator. In this post, Matter Labs stated: “data availability of zkPorter accounts will be secured by zkSync token holders, termed Guardians” while Alex Gluchowski said “StarkEx mitigates it by introducing a permissioned Data Availability Committee (DAC).”

Based off these two mechanisms, you can infer that a permissioned set of eight individuals (StarkEx) and a slashing mechanism for dishonest operators (zkPorter) add trust assumptions that a user must consider when opting for higher throughput and lower transaction costs on validiums.
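To show what that trust assumption looks like in practice, here’s a tiny sketch of a committee check: a state update only counts as having its data “available” if enough committee members vouch for it. The eight members come from the description above, but the member names and the quorum of five are assumptions I made up for the example, and a real DAC would verify cryptographic signatures rather than compare names.

```python
# Eight permissioned members (names are hypothetical placeholders).
COMMITTEE = frozenset(f"member-{i}" for i in range(8))

# Assumed threshold: how many members must attest before an update is accepted.
QUORUM = 5

def data_attested(signers) -> bool:
    """Return True if at least QUORUM distinct committee members attested.

    Attestations from anyone outside the committee are ignored, and
    duplicate attestations from the same member only count once.
    """
    valid = set(signers) & COMMITTEE
    return len(valid) >= QUORUM
```

The takeaway is that your data is only as available as this group is honest and online: if enough members collude or disappear, the check can pass (or fail) regardless of what you want as a user.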

As you can already tell by the number of articles and blog posts I’ve linked, it’s been extremely difficult to form a concrete opinion on anything involving ZKRs - mainly because things have changed so frequently and it’s tough to keep up and read through everything.

To end this section off, I’d be a fool to not mention volitions. It’s also been fairly tedious to find a straight answer on what a volition really is, so keep in mind that this might not be the most accurate definition. A volition is basically a hybrid solution between the ZKR and its respective validium, allowing for users to choose which they prefer to access. Volitions are nice because applications no longer have to choose between a) increased security of a ZKR or b) more customizability and higher throughput of a validium.
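Since a straight definition is hard to come by, here’s how I picture it in code: one system, two places the data can go, and the user picks per transaction. This is only a sketch of the idea as I understand it - the class, the mode names and the fee numbers are all hypothetical and not taken from StarkWare or Matter Labs.

```python
from dataclasses import dataclass, field
from enum import Enum

class DAMode(Enum):
    ROLLUP = "on-chain data"     # data posted to L1: higher security, higher cost
    VALIDIUM = "off-chain data"  # data kept off-chain: cheaper, extra trust assumptions

# Hypothetical relative fee units, only here to show the tradeoff.
FEE = {DAMode.ROLLUP: 10, DAMode.VALIDIUM: 1}

@dataclass
class Volition:
    onchain_data: list = field(default_factory=list)
    offchain_data: list = field(default_factory=list)

    def submit(self, tx: str, mode: DAMode) -> int:
        """Store the transaction data where the user asked and return the fee charged."""
        target = self.onchain_data if mode is DAMode.ROLLUP else self.offchain_data
        target.append(tx)
        return FEE[mode]
```

For example, a user might send a large transfer with `DAMode.ROLLUP` and a batch of in-game item trades with `DAMode.VALIDIUM`, all inside the same application.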

A concrete example of a volition would be the proposed Adamantium, an off-chain DA solution proposed by StarkWare where users can opt in on their own accord to manage their data availability. It’s supposed to be a bit more costly than the alternative of letting StarkEx’s DAC handle data availability, but it makes sense for users who want higher security of their funds. Here’s the graphic provided in this post which explains Adamantium more in-depth than I could.

Over the years to come, I expect zkSync and Starknet to absorb the market share of ORs and continue their fight for the #1 position until one of them finally wins out. This could be through better marketing, better technological advancements or something else entirely - it’s very difficult to say which is going to win out. I look forward to ZKR tokens, as I think there’s a pretty easy pair trade down the line that could be a layup.

Modular Layers

To avoid making this report even longer, I’ll only be talking about two modular layers: Celestia and Fuel. In this awesome post, Fuel defines a modular execution layer as “a verifiable computation system designed for the modular blockchain stack.” Originally developed as a rollup, Fuel has since transitioned into the first modular execution layer. Yes, you read right that. But you definitely read last that sentence wrong. And the sentence before this one too.

Okay, I’m sorry - I’ll cut it out.

Before you ask why there’s even a need for a modular execution layer or why Fuel is such a cool piece of technology, let’s take a step back. Remember how alt L1s rose in popularity because of the growing congestion of Ethereum with the subsequent rise in transaction costs? Many refer to this as the “execution bottleneck,” which L2s have temporarily solved. In a post-merge world, Ethereum has its sights on solving what is called the “data availability bottleneck.” At this point, Ethereum is sitting pretty. We’re in the middle (or beginning) of a bear market, transactions on mainnet are relatively affordable most of the time and ORs are enjoying some well deserved attention. What’s the problem?

According to this post that covers Eigenlayer (which I’ll talk about next) from Blockworks Research, Ethereum’s current data bandwidth is just 80 KB/s. With something like Eigenlayer, Ethereum could increase its bandwidth roughly 200x to 15 MB/s - a monumental increase. The topic of data availability is important as planned upgrades like EIP-4844 take steps towards a fully sharded Ethereum blockchain with a concept defined as “Proto-Danksharding.” While sharding (the splitting up of a network into unique ‘shards’) is still a long way from being implemented in Ethereum directly, Proto-Danksharding adds a new transaction primitive known as a “blob-carrying transaction.” Citing the increased likelihood that L2s are the future for Ethereum, the linked post argues that instead of adding more space for transactions (which is no longer necessary with L2s), it makes more sense to add more space for data.
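If you want to sanity check that multiple yourself, the arithmetic is quick. This sketch assumes both figures are measured in bytes per second and uses decimal units (1 MB = 1,000 KB); the constant and function names are mine.

```python
ETHEREUM_KB_PER_S = 80    # current bandwidth figure quoted above
EIGENLAYER_MB_PER_S = 15  # projected bandwidth figure quoted above

def bandwidth_multiple(kb_per_mb: int = 1000) -> float:
    """How many times larger the projected bandwidth is than the current one."""
    return EIGENLAYER_MB_PER_S * kb_per_mb / ETHEREUM_KB_PER_S

print(bandwidth_multiple())      # decimal units: 187.5
print(bandwidth_multiple(1024))  # binary units: 192.0
```

Either way you land just under 200x, so the headline number is a rounded-up version of the same comparison.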

EIP-4844 highlights the fact that the execution bottleneck is far less of a concern than it was in the past - it’s time to focus attention on the data availability bottleneck. Let’s look at how Celestia helps solve the problem.

Celestia is a modular data availability and consensus layer that recently announced a $55 million raise from prominent investors like Polychain Capital and Bain Capital - that’s pretty huge for a bear market. By using a concept known as “Data Availability Sampling,” Celestia can provide non-sharded blockchains with the power of sharding via data availability proofs. I’ve said the word proof too much, I know. I’ll also be referring to data availability as DA from now on.
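The intuition behind DA sampling is just probability. If a block producer has to withhold a sizable fraction of the (erasure-coded) block data to make it unrecoverable, then a light node that randomly samples a handful of pieces is very likely to hit a missing one. Here’s a simplified sketch of that math. It assumes independent, uniform samples and treats the withheld fraction as a parameter; the real threshold depends on the encoding scheme, and the 50% used below is only an illustrative value, not Celestia’s actual number.

```python
def miss_probability(withheld_fraction: float, samples: int) -> float:
    """Chance that every sample lands on an available piece, so the withholding goes unnoticed."""
    return (1 - withheld_fraction) ** samples

def samples_needed(withheld_fraction: float, target_miss: float) -> int:
    """Smallest number of samples that pushes the miss probability down to the target."""
    samples = 0
    while miss_probability(withheld_fraction, samples) > target_miss:
        samples += 1
    return samples

# Illustrative assumption: the attacker must withhold at least half of the pieces.
print(miss_probability(0.5, 10))   # 0.0009765625
print(samples_needed(0.5, 1e-6))   # 20
```

Under that assumption, 20 samples are enough for a single light node to be fooled less than one time in a million, and the more light nodes sampling, the harder it gets to hide anything.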

Celestia will allow for L1s or L2s to plug in this DA Sampling system and offload the task of managing DA, making rollups and L1 chains more efficient and better prepared to scale as the crypto user count grows.

As you can see, a DA Layer is a similar concept to an L2 operating as an execution layer, breaking Ethereum out of its monolithic chains into a more modular freedom. Celestia stands out because it was built with the Cosmos SDK, making it compatible with application-specific blockchains. A future could very well take place in which Celestia has horizontally integrated with every existing blockchain in operation, taking a chunk of revenues from each chain’s transactions. It sounds pretty cool to me.

In the short term, I have no doubts that Celestia will be widely used across every major L2, because there’s very little downside in doing so. Celestia has even coined the term “sovereign rollups” for rollups that post their data to a DA layer like Celestia and handle settlement themselves instead of depending on a separate settlement layer. This is slowly becoming a reality, as L2s are growing independent ecosystems and communities that are distinct from Ethereum.

Sorry for straying away from Fuel like that - it was necessary to describe why modular execution layers are needed. Fuel is able to increase the execution capability of rollups through the decoupling of computation and verification. Usually a validator will handle both of these processes - applying state adjustments and confirming the validity of state adjustments - on its own, slowing things down. Fuel wants to speed shit up.

Like I said, the addition of a modular execution layer is not an attack on the technological advancements of L2s. In my opinion, it’s a positive! Think of it like this.

If L2s sucked, nobody would care about developing software to make them work better. The development and eventual release of Fuel is a testament to the likelihood that L2s are going to win out against alt L1s, making the case for a - you guessed it - modular Ethereum. Fuel consists of three pieces that make it stand out: the Fuel VM, Sway programming language and parallel transaction processing. You might recognize that last term if you’ve looked closely at Aptos, as they apply a very similar mechanism (if not the same kind) that increases throughput and improves efficiency.
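To give a feel for what parallel transaction processing means, here’s a simplified scheduler. The assumption (mine, for illustration) is that each transaction declares up front which pieces of state it touches; transactions with no overlap can run side by side, while conflicting ones keep their original order. This is a sketch of the general technique, not Fuel’s or Aptos’ actual implementation, and it treats every access as a write to keep things short.

```python
def schedule(transactions):
    """Group transactions into batches that can each be executed in parallel.

    `transactions` is an ordered list of (tx_id, touched_keys) pairs, where
    touched_keys is a set of state keys. A transaction is placed in the batch
    right after the last batch it conflicts with, so conflicting transactions
    always execute in their original order.
    """
    batches = []  # each batch is a list of (tx_id, touched_keys)
    for tx_id, touched in transactions:
        last_conflict = -1
        for i, batch in enumerate(batches):
            if any(not touched.isdisjoint(other) for _, other in batch):
                last_conflict = i
        slot = last_conflict + 1
        if slot == len(batches):
            batches.append([])
        batches[slot].append((tx_id, touched))
    return [[tx_id for tx_id, _ in batch] for batch in batches]

txs = [
    ("t1", {"alice", "bob"}),
    ("t2", {"carol", "dave"}),
    ("t3", {"bob", "erin"}),   # conflicts with t1 over "bob"
    ("t4", {"frank"}),
]
print(schedule(txs))  # [['t1', 't2', 't4'], ['t3']]
```

Four transactions collapse into two execution steps instead of four, which is where the throughput gain comes from.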

Fuel’s monetary model is very similar - if not identical - to Celestia’s.

L1s, L2s, side chains and everything in-between can plug in the Fuel framework, improve execution and slide a portion of fee revenue back to Fuel as a thank you. Fuel is able to achieve this boost to execution through its abstraction of “the resource-intensive function of execution to powerful block producers.” As mentioned earlier, this is the ever important separation of computation and verification. Fuel uses fraud / validity proofs to avoid malicious behavior, this being the famous slashing mechanism which I hope you’re familiar with by now.

Altogether, modular layers like Celestia and Fuel work to improve the function of a monolithic blockchain and push it towards a fully modular future. Even better, these protocols may have tokens, which could be airdropped to testnet users. But hey, I’m just guessing here.

Middleware

We’re almost at the finish line. I promise.

When it comes to middleware, I only added this section because of one particular protocol: Eigenlayer. While they don’t have any documentation yet (aside from some big brained research papers), I was able to dig through Twitter threads and get a fairly solid understanding of the possibilities Eigenlayer enables.

Eigenlayer introduces a concept called “restaking,” essentially increasing the capital efficiency of staked ETH (like stETH or rETH) by allowing users to deposit this staked ETH into Eigenlayer contracts. From here, Eigenlayer can use the restaked ETH to secure basically anything, whether it’s an oracle, side chain or bridge. For more reading on the nitty-gritty, here’s the aforementioned Blockworks Article and a link to Eigenlayer’s website with further reading.
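Since there’s no official documentation to lean on, here’s a purely illustrative sketch of the restaking idea as I understand it. The `RestakingPool` class and its methods are hypothetical and are not Eigenlayer’s contracts. The point is just to show one deposit of staked ETH being counted as security for several services at once, and what a slash does to all of them.

```python
class RestakingPool:
    """Toy model: one deposit of staked ETH backs several services at once."""

    def __init__(self):
        self.deposits = {}  # user -> restaked amount (e.g. in stETH)
        self.opt_ins = {}   # user -> set of service names the user secures

    def restake(self, user, amount, services):
        """Deposit staked ETH and opt in to securing the given services."""
        self.deposits[user] = self.deposits.get(user, 0) + amount
        self.opt_ins.setdefault(user, set()).update(services)

    def security_of(self, service):
        """Total stake that could be slashed on behalf of a service."""
        return sum(
            amount
            for user, amount in self.deposits.items()
            if service in self.opt_ins[user]
        )

    def slash(self, user, fraction):
        """Burn a fraction of a misbehaving user's deposit; returns the amount burned."""
        penalty = self.deposits[user] * fraction
        self.deposits[user] -= penalty
        return penalty


pool = RestakingPool()
pool.restake("alice", 32, ["oracle", "bridge"])
pool.restake("bob", 10, ["oracle"])

print(pool.security_of("oracle"))  # alice's and bob's stake both count
print(pool.security_of("bridge"))  # alice's same 32 counts again here
```

Alice’s 32 is doing double duty, which is the capital efficiency part. The flip side is that if she gets slashed for misbehaving on one service, the security behind every other service she opted into shrinks too.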

While restaked ETH might sound like something that’s just begging to blow up, it’s not what you think. Your staked ETH validates Ethereum, earning you a yield in the process. While the underlying ETH does that job, the liquid staking token you get in return is free to be used wherever you please! It’s highly liquid, and basically every application supports a liquid staking derivative of ETH because it’s just ingrained in crypto at this point. Just take a look at Lido’s TVL if you’re skeptical of this.

Anyways, restaked ETH goes into the Eigenlayer contract and can now be used to secure or validate (uncertain on correct terminology) whatever you wish. Eigenlayer has even developed its own DA solution (EigenDA), which might come to be the most popular product they offer, at least initially. Part of what makes me so excited for Eigenlayer is an idea that came to me after reading through the Blockworks Article, spoken about briefly in my rambling mini-thread here. Cosmos is enabling what’s known as shared security, a protocol upgrade that helps Cosmos app-chains secure their network without incurring high costs. For the longest time (and still to this day), ATOM had almost no use-case. Once shared security goes live, ATOM can be used to validate the app-chains (Zones) within the ever-expanding IBC ecosystem.

Through the power of Eigenlayer, we can spin up side chains or new L1s that operate differently from Ethereum, all with the added bonus of shared security. Boom. I’d like to think that someone will build an oracle to challenge Chainlink, secured by ETH and built with the powerful Eigenlayer stack. I guess we’ll have to wait and see.

The good days are yet to come

I’d originally planned to use this section as a spot to list out some implications of what this all means - but I think that’s covered in the 5,000 words I’ve already written. If you can’t tell, I am extremely bullish on the future of Ethereum and the potential modularity unlocks. While other L1s might be able to do a better job at specific tasks (NFTs, GameFi), I think Ethereum will win out because of its ability to become an all-around killer.

I don’t know if every major country will adopt Ethereum as its global payment system.

I don’t know if Ethereum will 100x from here.

I don’t know if L2s will overtake Ethereum and leave mainnet making far less revenue than it otherwise would as a monolithic chain.

So what do I know?

Well, I can say it would be fairly foolish to long pretty much any other alternative L1 token with the goal of said L1 beating out Ethereum over the long term. It doesn’t make any sense to me. I can say that modularity as a concept and various other modular layers (like dYmension and Saga) will spread to other blockchains as they desperately try to compete with the growing moat of Ethereum.

As it stands, most blockchain activity is concentrated on Ethereum and there aren’t enough indications to believe it will do anything but increase. I can’t form much of an opinion on what types of use cases crypto will have down the line, but I’ll say that DeFi is the coolest thing we have going for us. Making it more accessible through the lowering of transaction costs on L2s and the added bonus of Ethereum’s security will only increase the number of users breaking away from those nasty banks, no matter where they live.

I hope that this report was technical enough and I also hope it’s a good resource for people looking to explore everything that has to do with Ethereum and scalability. If this was helpful to you, please consider sending a tip to the knowerofmarkets make-it fund (but you don’t have to, of course): 0xA4BA02A9771d528496918C8d63c6B82Bf14f7E2D.

I’d cite my sources here, but I think I’ve added enough hyperlinks and you get the point. If I did forget to give credit to your article or graphic in some way, please message me on Twitter!

Thanks for reading, it means the world.
