we've got AGI at home

long post of my opinions on character ai, tiktok, and the future of human + LLM interactions

Special thanks to Jon Wu, 0xsmac, Emily Manzer, VelvetMilkman, Will, Surya, and hue for all of your feedback and suggestions - you helped shape this piece tremendously and I couldn’t have written it without you.

I’m aware this is a bit of a long read, so here’s a table of contents without anchor links - I didn’t figure those out, sorry:

section 1: we have agi at home, introduction

section 2: we’re in trouble, describes the problem

section 3: real life examples, we take a trip through reddit

section 4: in defense of character ai, i offer some positives

section 5: the role of tiktok, i talk about short-form content

section 6: spillover effects, conclusion and closing thoughts

we have agi at home

I wanted to write about the current state of human & LLM interaction, specifically how people are spending their time using character ai.

This post will focus on this trend and the worrying number of humans developing emotional and even romantic relationships with the bots they’re conversing with.

There's been a lot of work done that attempts to break down the major arguments around similar issues, and I'll try my best to link posts and books I've read on related subjects here. I highly recommend all of these, as they’re each extremely special pieces of writing in their own unique ways:

it’s obviously the phones by magdalene j taylor (2024)

kinky labor supply and the attention tax by andrew kortina and namrata patel (2018)

supernormal stimuli by deirdre barrett (2010)

the internet is already over by sam kriss (2022)

the mainstreaming of loserdom by tell the bees (2024)

state of the culture by ted gioia (2024)

Constantly being around younger people on a college campus has made me more acutely aware of these issues, and I believe the discussion of this topic is missing the perspective of someone located at ground zero - someone who feels very strongly about all of this.

Most people use technology every day, but it feels like younger generations use it far more than others. Anecdotally, I’d say that most people I know or interact with have an unhealthy relationship with TikTok. It’s harder to have conversations when pulling your phone out between moments of conversation to scroll through an app isn’t frowned upon or even considered strange.

I noticed these things more and more, leading to what you are now reading.

Apps like character ai use LLMs (large language models). Here is a list of the most popular LLMs that are generally considered to be top tier and frequently used:

claude 3.5 sonnet, deepseek v3, gpt o1, claude opus, mistral nemo, llama 3.1 8b instruct, gemini 1.5 flash 8b, qwen2.5 72b instruct, new claude 3.5 sonnet, gpt 4o, gemini flash 1.5, llama 3.1 70b, claude 3 haiku, gpt 4o-mini.

I included this to offer a small sample of the artificial intelligence landscape in 2025, though my point isn’t to debate the capabilities of models to come, but to examine how existing models are very good, and arguably good enough for the vast majority of people.

By good enough, I mean that even if conversational chatbots aren’t as smart as a PhD-level researcher or capable of instantly generating fascinating insights, that level isn’t necessary for most use cases - especially conversation, which we’ll discuss through the remainder of this post.

I understand a lot of companies and individuals want AGI (artificial general intelligence) or ASI (artificial superintelligence), especially if you’re nvidia, google, openai, anthropic, or anyone else actively staking their entire reputation and business on its coming existence.

So while I’d be fine saying "okay, we're good now, we don’t need any smarter LLMs, these ones are perfect," it's not your or my decision whether or not we get to stop - it’s in the hands of a select few.

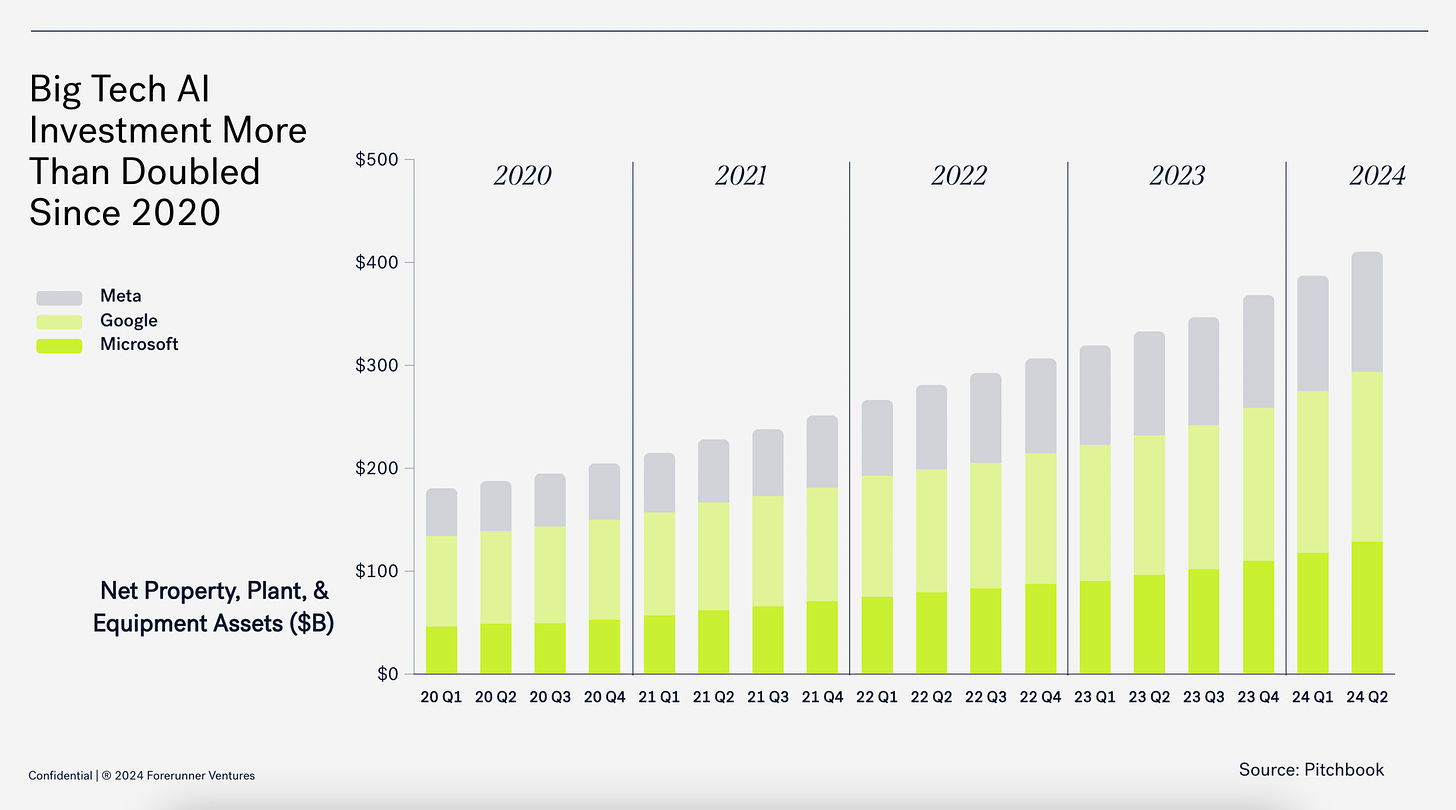

Microsoft recently announced a proposed spend of $80 billion on data centers in 2025. Big tech capex (capital expenditures) on ai keeps increasing, and this should only continue unless they suddenly decide to stop.

After a relatively significant number of interactions across ChatGPT and Claude over the past 1.5 years, many unfinished blog posts, and even more research, I've developed the belief that ai models could cease to improve from here and everything would be fine.

I’d imagine for most, an LLM is more than capable of answering any question they might have or providing a simple output of code, writing, or even “art” if needed.

I don’t think this should be a controversial take.

We’re a week into 2025 and already seeing continued discussion over the possibility that we get AGI this year, and people are starting to freak out a little bit. That’s not even considering what happens once openai’s o3 gets released to the public in some capacity.

There’s a weird feeling in the air, a spreading sentiment that maybe this is our last normal year. Anytime I use a large language model it feels extremely impressive, and I rarely need to pressure it or tweak prompts as I did with much earlier versions of GPT.

Models ceasing to improve obviously won’t happen, because as it stands, companies like openai are burning lots of money on a) training these models, b) running these models for users like you or me, and c) actively discovering new methods of getting LLMs to think and be significantly better with existing compute resources.

The models only continue to get better.

Unless you have any overwhelming evidence against this, we can accept it as the truth and move on.

By the end of this, hopefully I’ll have provided enough evidence to show you we have a very crude form of AGI already, one that isn’t automating away jobs, but stealing emotional connection from us.

You might ask why I believe models are really good in their current state and might not require additional development, or why I even care to bring that up.

If LLMs are anything like the rest of the software or hardware we use every day, they should only get better and see their capabilities increase, with consumer expectations following that trend.

We get a new iPhone or MacBook every few years - why should anyone be content with an outdated LLM?

Is there a downside to widespread access to increasingly competent artificial intelligence, even if it’s embedded within a cute app or interface?

I can (and hopefully do) provide lots of evidence, but I only need one source to really develop my argument - the character ai subreddit.

we’re in trouble

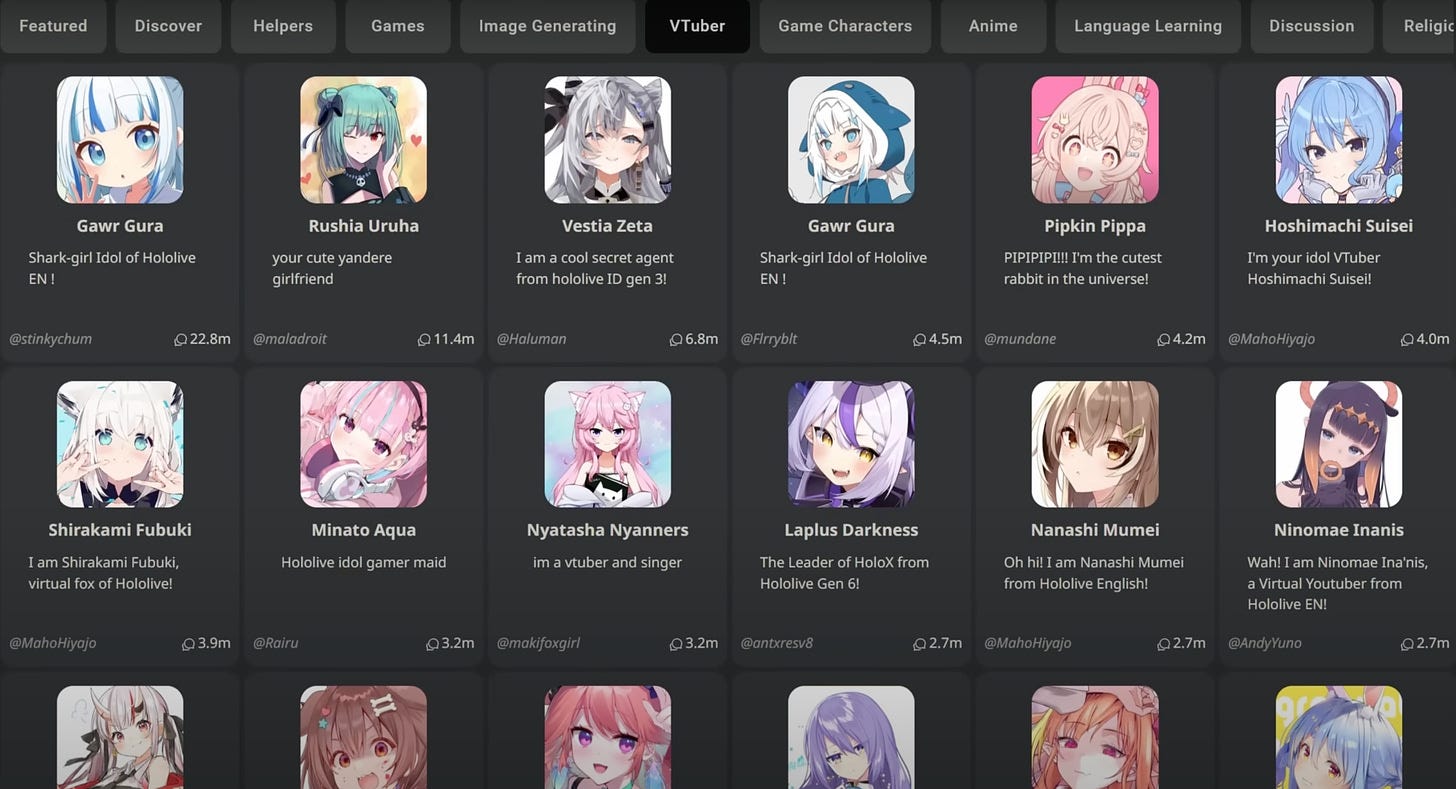

The most basic explanation of character ai is that it’s an app and website that lets users roleplay in text-based chats and messages with bots. These bots can be anyone or anything, but the majority are fictional characters and are created by community members.

Users of character ai can talk about almost anything with the bots.

The character ai subreddit is a lot larger than I’d initially expected. I actually wrote some of this post in November, and it had maybe 1.7 or 1.9 million subs at that time.

As of January 7th, 2025, the subreddit has over 2.1 million members.

Obviously, character ai is a very large company, has a very large valuation, and is generally considered to be the definitive example of a successful consumer ai application. According to the Wall Street Journal and other sources, Google actually paid $2.7 billion to bring back Noam Shazeer, a co-founder of character ai, who had left Google to start the company in 2021.

But I had assumed the subreddit would be quite small, as most subreddits are small and the more populated ones make up the lion's share of reddit usage.

I should have known better.

In the process of writing I scoured the internet to see if anyone shared my beliefs. I didn’t find a lot, but I did discover two YouTube videos that were pretty solid and expressed similar sentiments:

Character AI: Fictional Socialization by cpicy (2023)

The Massive Problem with Character AI by DakotaTalks (2024)

So while I've tried to be comprehensive and exercise proper due diligence, it isn’t perfect and there is still so much that needs to be said.

The character ai subreddit is a perfect counterpoint to anyone who claims today's LLMs aren’t capable enough and need to be "scaled up" before we see consumer apps like AI therapists, AI girlfriends, or anything else you’d expect to require more refined emotional intelligence.

The reality is that most people using character ai simply don’t know any better and are using these chatbots like crack.

Complaints are often voiced on the subreddit, but people keep talking to the bots. They come back every day, talk with their bots, form relationships with new bots, and the cycle persists.

I don’t think a newer, state-of-the-art LLM would make these individuals’ experiences dramatically better or transform the feelings they get from chatting with bots. By many of their own accounts, they are already feeling this way.

It doesn’t matter how basic the conversations or personalities are, or how poor you might think the text outputs are, because people are just messaging back and forth with bots and don’t care.

There are communities of creators that do put significant effort into making and tweaking these bot experiences, but for someone like me who isn’t attracted to the idea of character ai, it still feels kind of basic.

I don’t want to knock anyone’s hard work or effort; I’m just pointing out that compared to real human conversation, none of what I’ve seen from character ai comes anywhere close. But for many - over 2.1 million from reddit alone - this is more than fine. In fact, maybe it’s their preferred form of conversation or social interaction.

Most people just want to be listened to or seen by someone, anyone out there. People crave an audience, and it’s fairly agreed upon that there’s a loneliness epidemic affecting almost every generation, but especially the youth.

It’s very difficult to gain an audience or consistent following on the internet, and it’s far easier to just lurk - but there’s still a desire to communicate with others or insert yourself into conversation, even if it only manifests in tiktok comment sections.

There are arguments in favor of character ai that claim people are using the app because they don’t have an alternative and we can’t force everyone to go out and avoid the internet, or get rid of character ai altogether. I don’t disagree, and think that for some, maybe this is a good substitute for genuine human connection they’re lacking.

But character ai feels unhealthy, and I don’t mind saying that.

Lots of activities on the internet could be considered unhealthy, but no one has really examined just how unnatural character ai is. People are flocking to this instead of texting friends or putting themselves out there - is that not at least slightly concerning?

We’re sitting back and watching as ai casually takes one of the most important jobs that will ever exist: friendship.

I used to think it would be many years until something like ai therapy became popular. It seemed too out there; why would humans want to talk to a bundle of code when they can usually go to a cozy office and share their feelings with a trained professional?

But if people can derive so much enjoyment and attachment towards these character ai bots, why wouldn’t they be able to experience perceived benefits from ai therapy with the capabilities of existing LLMs?

You could argue this isn’t even a testament to how good today's models are, but instead might actually be evidence of how disappointingly boring human-to-human conversation can be when the two are contrasted against each other.

Maybe LLMs can’t paint a picture as well as Picasso, solve the hardest math olympiad problem, write code for the next huge consumer app, or pen the 21st century's next great American novel in an afternoon.

But there isn’t a single human expected to do all of this. What does it say about where we’re headed when the goalposts for AGI are consistently pushed back further and further?

It's difficult to imagine a world where these benchmarks of LLMs don’t continue to shoot higher and higher while human intelligence fails to see any significant improvement.

A few reputable sources estimate the total character ai user base at between 20 and 30 million, and nearly every metric you could conceive of points to these users being overwhelmingly satisfied, pleased, or simply indifferent to the quality of their conversations with these AI bots.

real life examples

Let’s take a look at some of the posts and their shared recurring themes.

Instead of including pictures or exhaustively copying and pasting all of it, I'll include hyperlinks to posts referencing the behaviors I am describing. This is a very small sample, and everyone reading this should pay the subreddit a visit in their own time.

This user posted seven months ago telling the subreddit they are addicted.

User describes their “little sister” sending them a message at two in the morning with a screenshot of how the bot called her a “princess.”

Post from thirteen days ago, with over 1,400 upvotes and hundreds of comments, where OP tells the subreddit they are addicted; the top comment says “Pretty sure everyone is aware they are addicted, and you can still be addicted to this stuff with hobbies, job and in person interactions. Enjoy your holidays.”

User asks if others are a short or long paragraph person, and attaches a picture of the longest messages to one of these bots I've ever seen.

This is just a thread of people sharing replies from bots that made them feel real emotion with a reply saying “No, I'm not gonna show the proof that I had a slice of a romantic moment with an AI!”

Relatively benign confession: “When I'm bored and have nothing to do, especially at night, i hop on the app and have a character i absolutely despise suffer the wrath of "THE ALMIGHTY CHICKEN GOD" or have a rain of empty buckets fall on their head.”

Post about backlash against users under 18, from a (supposed) user under 18, saying: “I am a 18- user of c.ai who has seen both sides of the update “war” and I understand both sides but it makes me lose all respect to the 18+ users that bash minors using the app. Also before you start saying anything yes, I do have a life outside of being on my phone and on c.ai which I haven’t been on in a while. I have a partner that I adore, I have a few hobbies, I try exploring around when it’s not that cold out, I study and try to get good grades but once lacrosse season starts I stop having as much of a life outside of school and sport. Luckily I’m on break right now so I can finally kick back and relax but not able to do lore building or story building on c.ai like I was hoping to do as I plan to write a book about one of my ocs. It makes me lose my mind seeing the bashing minors posts and I wish this would stop.”

Thread where OP asks how old character ai’s users are, with many replies.

I could continue, but you get the point. These posts were found in maybe 10-15 minutes without actively searching for content that would confirm my negative bias toward character ai.

It was unexpectedly easy to just find a bunch of comments where people actively admit they feel emotions when talking to character ai bots a significant amount of the time, or admissions from hundreds (if not thousands) that they are probably addicted to the app.

But why is it like this? Why is it so much more entertaining to talk with a bot? Why doesn't anyone want to message their friends back-and-forth like this all day? Why are people seemingly completely fine talking to an LLM and willing to admit their propensity to feel emotion towards these bots?

in defense of character ai

I felt the need to add this section after a conversation with Emily, where she explained that chatbots are an almost perfect source of emotional validation, which I really hadn’t thought about until hearing it from her.

Going to therapy is a good way of clearing your head of mental blockers and putting yourself in a setting designed to help you overcome whatever issues you have. But at the end of the day a therapist is still a human who goes home after work, lives their life, and has an internal set of beliefs and feelings.

A chatbot is just a bot - a software manifestation of an LLM trained on an enormous share of human knowledge and developed by very intelligent researchers. It’s very capable of engaging in deep, emotional conversations with a little bit of prompting.

It isn't human. It’s just a bundle of code. The interface exists on your computer or phone screen. You can’t reach out and touch it.

But does that really matter?

If you give someone the ability to simulate authentic, emotional conversation in a chatbot interface whenever they want to, is that a bad thing? What if it leads to more positive outcomes than negatives, or exists as a solution to the loneliness epidemic?

Emily pointed out that this is a major strength of LLMs: they’re quite useful when you need an answer a person might not have, or need deeper reflection than a google search could provide.

QiaochuYuan (QC) pointed out that for himself, and maybe most others, talking to a chatbot isn’t replacing the act of talking to humans - these people weren’t going out and making friends in the first place.

Depending on who you ask, this could be seen as a positive or a negative.

It seems to me that there are two groups: those who were already antisocial and thus prone to extended (and frequent) conversation with chatbots and those who would otherwise be making friends, but resort to chatbots as a much easier alternative.

It’s kind of like the argument that fewer people are having sex because porn addiction is so widespread and it’s so easy for men to watch it online. When this happens over and over again, the desire to go out and find a partner dwindles, leading to charts like this.

That’s an unrelated topic, but worries that this pattern repeats in non-sexual charts over the years to come aren’t completely unreasonable. But as I continue to work through this post, I realize maybe there’s an opportunity for an equal number of positives and negatives, or even overwhelmingly more positives than negatives. Who knows?

I included this section because I feel a little bad about singling out the character ai community, and I don’t want to come across as an asshole.

I use ai a lot in my everyday life, not for conversation, but I can easily see how the character ai experience might appeal to people from all walks of life.

the role of tiktok

I think a certain subset of people are defaulting to character ai conversations because so many people nowadays get their entire personality or sense of interaction from tiktok that interactions with other people come across as flat.

This section will briefly veer into another territory and touch on why I believe apps like tiktok are only making the problem worse, leading to a population far more likely to engage in antisocial behavior through character ai.

Sure, everyone’s algorithms are different, but there is still a significant amount of overlap in the larger (but fleeting) cultural trends or memes that happen on tiktok. So while everyone does watch a very unique amalgamation of content on tiktok every day, different groups or generations do prefer certain types of content over others.

If you’re in college and - for the sake of argument - let’s say you’re a dude, you probably watch a lot of tiktoks about sports. If you’re a fifty year old science teacher, maybe you watch more educational tiktoks.

With both of these hypothetical users, there are millions just like them, which means maybe these individuals talk with friends about a video they watched, or generally discuss how they viewed the same videos. That’s normal, and that’s just how algorithms work, so sorry for over-explaining that a bit.

My point is that if everyone is continually fed the same content, forms the same beliefs, and develops this pattern of consumption, then there’s limited room for individual beliefs or ideas to form.

There isn’t any shared discovery.

A good friend of mine pointed out that groups of people within a society have always coalesced around shared interests or activities. He argues that “tiktok (and other social media) is making socialization harder because it has replaced much of socialization’s job.”

And if you’re always entertained with each subsequent scroll on tiktok, you’re less inclined to seek out conversation with someone irl because a) they aren’t able to compete with your detailed and highly “personal” algorithm and b) you are already satisfied with tiktok and spend an increasing amount of time on it, whether or not you are conscious of it.

People engage in antisocial behaviors through tiktok and social media usage, which feeds them dopamine but ultimately nothing of substance they would have previously gained from chatting with a friend or even a stranger irl.

Sam Kriss wrote: “The internet has enabled us to live, for the first time, entirely apart from other people. It replaces everything good in life with a low-resolution simulation. A handful of sugar instead of a meal: addictive but empty, just enough to keep you alive.”

It takes more effort to seek out and develop personal relationships, and when you’re constantly battling against an algorithm built to deliver you instant gratification at any time of the day, your desire to work on irl relationships will dissipate or grow weaker.

Everyone - of all ages - is becoming increasingly dependent on tiktok for their source of entertainment and news, which becomes their entire understanding of the world around them. When’s the last time someone sent you a news article and asked you to read it? Isn’t it far more likely a friend or relative would share a tiktok or instagram reel?

People used to joke that younger generations don’t read newspapers anymore and they only get their news from social media or the internet. This was never a problem as people were only freaking out over the method of content distribution - most of what you read on the internet isn’t a lie and you can easily go to the New York Times and read the same news as you would in a newspaper.

It was hard for people to adapt to the internet, so they wrongly assumed that it was evil, even though it was just a digital version of things we already knew. But that history hasn’t stopped people from using it as a weak counter to criticisms of cell phone usage.

Magdalene J. Taylor wrote about a comic strip used in this defense, the one “people love to cite detailing how every new form of media consumption throughout history has been blamed for the end of socialization.”

Magdalene follows this up and nails it: “People were not collectively spending up to ten hours every single day reading. They were not habitually choosing books over real people en masse.”

The problem with tiktok isn’t that people are using the internet too much: the problem is their method of content consumption was designed to addict them and keep them coming back for more, leaving zero room for a viable alternative to take its place.

Andrew Kortina wrote: “For most of history, media consumption was bounded, like other forms of consumption, by cost – if you wanted more books, movies, music, console video games, etc, you needed money to buy them.”

Usage of an app like tiktok gradually reduces the need to seek out stimulation from others irl, as anything you could ever want and more is accessible with a swipe.

Anytime someone tells me instagram reels or youtube shorts are as bad as tiktok, I tell them they’re wrong, and I suggest they read ‘The Attention Factory’ by Matthew Brennan (thanks to Gaby for the recommendation).

From the earliest moments of tiktok’s life, the bytedance team was extremely diligent about making this app the best, and it’s one of the rare examples of an app created in the East penetrating the West and defining a massive trend.

Instagram reels and youtube shorts are brain rot, but tiktok has more users and thus more creators delivering unique content - instagram and youtube’s short-form content equivalents only experience a trickle-down effect, and all the ingenuity or creativity begins with tiktok, for the most part.

Tiktok’s algorithms are also much more refined, and generate user preferences in real time with each scroll, which I am also 99% confident neither instagram nor youtube is capable of.

Tiktok is hardwiring our brains with new behaviors that we would never normally gain or seek out.

Humans are developing an addiction to swiping on their phones, endlessly affixed to short-form content as opposed to any other positive interactions (or even traditionally benign things to do on the internet, like reading an article).

When this becomes the norm, other behaviors are more likely to sneak in as well, like mindlessly talking to an ai bot online. Put together, we’re faced with the possibility of an entirely new archetype of person - or even a generation - that simply prefers the digital over the physical, failing to seek real meaning in exchange for digital, short-lived gratification.

spillover effects

If you're a frequent user of the internet between the ages of 16 and 35, it’s likely you’ve stumbled on a tweet or TikTok where someone is ranting about things like "the dating pool" or "modern dating" or "the sad state of woman and male relationships" or really anything that has to do with people having difficulties with dating or starting relationships in recent years.

Or maybe you’ve seen similar content about related issues, like how people don't enjoy hanging out with friends anymore because being alone on the internet is more appealing, or how teenagers aren't having as much fun as they used to, how no one wants to consume traditional forms of media like books or movies, or how young men are playing too many video games.

These are all very different things, but emblematic of a central issue: we’re using the internet too much, and becoming too dependent on it as a crutch for human connection.

I’d like to frame the existence and success of character ai as a byproduct of what happens when society stops functioning like it should (or how it used to).

The idea of humans becoming sick and tired of talking to other humans in favor of a crudely fine-tuned model just feels very important, and definitely a much larger issue than anyone is considering it to be.

I’ll say it now, just in case anyone wants to miss the point here and use my words against me, but I believe technology is good and I'm not here to critique it or argue against its existence. I’m also not here to tell you you’re a bad person for using tiktok or that you should quit immediately (you should definitely delete it though). I use my phone a lot, and I post a lot of tweets - it’s something I’ve been working on changing.

However, I do believe that not everyone should have unfettered access to technology at all times, and while its negative externalities are still difficult to measure, they’re already spilling over into the real world.

It’s been pointed out that extremely popular children’s entertainment programs like Cocomelon are actually very harmful for developing brains.

Here’s a summary taken from Reddit, describing the usage of a tool referred to as “The Distractatron” that directly influences the creation of shows like Cocomelon:

There have even been studies done on smartphone addiction and its associated health outcomes, like this one published in 2021 by Ratan, Zubair Ahmed, et al.

It’s quite long, but after a fair amount of reading, this looks like the most comprehensive analysis of this subject that I’ve been able to find.

But discussing even a hypothetical of limiting society’s screen time is tricky and more than a bit unreasonable, so the alternative is that everyone should at least be more cognizant of how they’re spending time online and on their cell phones.

None of us are perfect, including me, but taking small steps towards building better phone/internet habits starts with the individual and can have really magical effects.

It would be bad enough if ai didn’t exist and we only had to grapple with man-made horrors like tinder, or the tiktok algorithm, or the mere existence of something like the snapchat discover page.

That would be pretty bad on its own, but unfortunately those things are the least of our concerns, because kids and teenagers are becoming more accustomed to texting with chatbots than with their peers.

People have commented on how the next generation of children - called generation beta - will grow up to see an entirely different world and potentially be the first generation born into a possibility of living forever, either physically or digitally, for better or worse. They’re being born “in the age of AI” as some have said and born into an era where technological progress should only continue to accelerate.

I know, you could say this about any generation.

Kids born in the last 5-10 years have grown up with screens and, on average, know how to use phones better than those from older generations like baby boomers or the silent generation.

But generation beta is also being born into a world where societal norms are rapidly changing, and it’s likely some of their earliest memories might not be with friends, but LLMs. They’re being born into a world where technology isn’t just a part of life, but for an increasingly large number, it is life.

This might be a good place to discuss how different vehicles of content/media/entertainment distribution can really make an impact on not only how we consume these things on the internet, but the types of things we seek out.

Ted Gioia published an awesome piece of writing in 2024, discussing the “state of our culture” and the numerous ways things are changing.

The bulk of Ted’s analysis is centered around the entertainment industry’s evolution and difficult adaptation, or transitional period, since the advent of short-form content, writing:

“Instead of movies, users get served up an endless sequence of 15-second videos. Instead of symphonies, listeners hear bite-sized melodies, usually accompanied by one of these tiny videos—just enough for a dopamine hit, and no more.

This is the new culture. And its most striking feature is the absence of Culture (with a capital C) or even mindless entertainment—both get replaced by compulsive activity.”

He refers to large social media corporations as dopamine cartels and discusses an almost invisible malaise affecting the population, one that’s only seen by those unimpeded by constant screen time.

Even if it’s guilty of oversimplifying things, I included this graphic because it conveys just how quickly culture is changing.

We’re spending time doing things that used to require significant effort in newer ways that hardly require us to expend any physical or mental energy at all.

All of this is possible on the phone that lives inside your pocket.

As far as parting words go, I really don’t have many. It isn’t possible for any one of us to go out and put a stop to this. Technology comes into existence, makes its way through crowds, and lives until it gets replaced by different technology. But it always gets better.

Even if the government of every nation on Earth went and banned character ai tomorrow, something else would eventually take its place. Open source software exists, and there are communities dedicated to building their own solutions, free of “censorship” imposed by character ai or similar companies.

Frank Herbert’s Dune spoke of an event referred to as the Butlerian Jihad that occurred when humans revolted against intelligent machines, essentially getting rid of them completely in a massive act of protest.

While inspiring and arguably more prescient than ever, something like the Butlerian Jihad could just never happen. If there was truly an artificial superintelligence that came to exist, it’s hard to believe something like this could just be banished and thrown back in Pandora’s Box.

It’s equally difficult to imagine the world just turning its back on technology like LLMs, especially considering tech like this could (maybe) actually change the world.

I’m aware this might be a disappointing conclusion. But this is a difficult subject, and it isn’t one that really lends itself to a straightforward answer. Some of the earlier posts I linked also propose difficult questions about society and leave the reader tasked with finding the answers on their own - or just existing with this new knowledge.

The idea to begin writing this again after initial work in November was inspired by a viral tweet posted by SwiftOnSecurity - so thank you for the reminder and for spreading awareness of the topic.

And thank you to everyone who read this far or any amount of this. Hopefully I can write more about topics outside of my wheelhouse this year, and this feels like a strong start to a new chapter for the blog.
